Working with Python in Visual Studio Code, using the Microsoft Python extension, is simple, fun, and productive. The extension makes VS Code an excellent Python editor, and works on any operating system with a variety of Python interpreters. It leverages all of VS Code's power to provide auto complete and IntelliSense, linting, debugging, and unit testing, along with the ability to easily switch between Python environments, including virtual and conda environments.

This article provides only an overview of the different capabilities of the Python extension for VS Code. For a walkthrough of editing, running, and debugging code, use the button below.

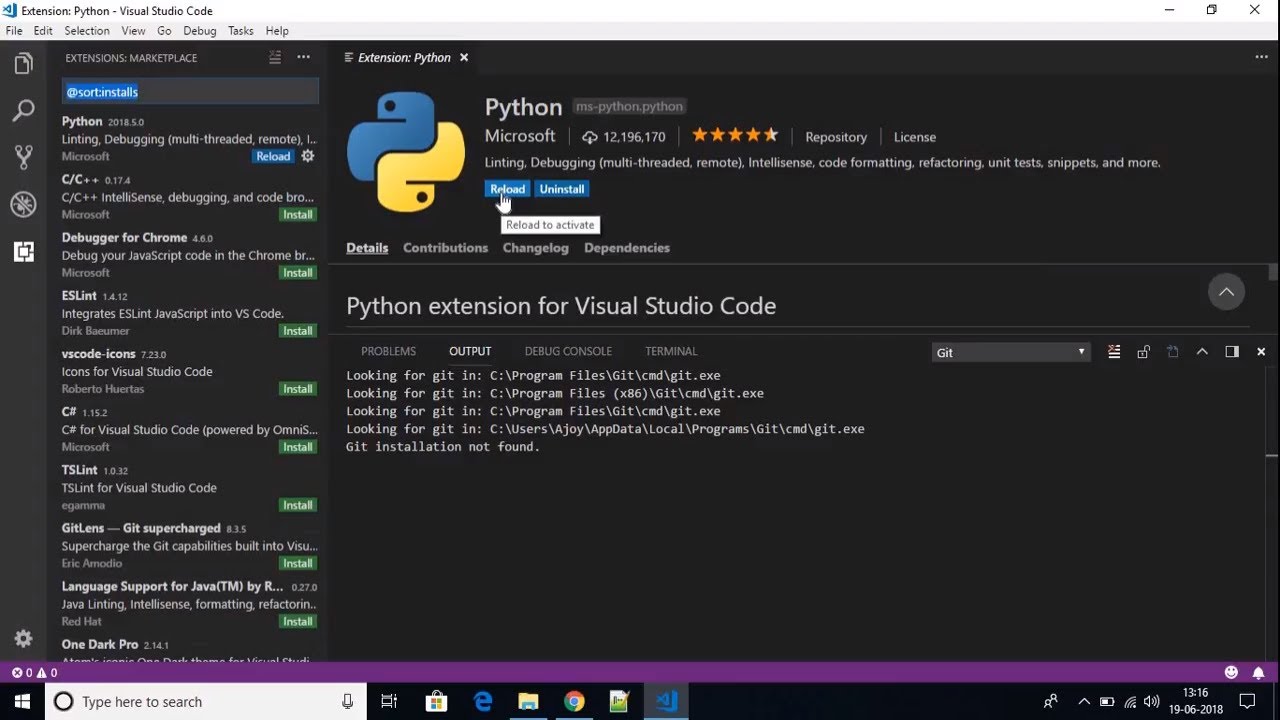

Install Python and the Python extension

The tutorial guides you through installing Python and using the extension. You must install a Python interpreter yourself separately from the extension. For a quick install, use Python 3.7 from python.org and install the extension from the VS Code Marketplace.

Once you have a version of Python installed, activate it using the Python: Select Interpreter command. If VS Code doesn't automatically locate the interpreter you're looking for, refer to Environments - Manually specify an interpreter.

You can configure the Python extension through settings. Learn more in the Python Settings reference.

Windows Subsystem for Linux: If you are on Windows, WSL is a great way to do Python development. You can run Linux distributions on Windows and Python is often already installed. When coupled with the Remote - WSL extension, you get full VS Code editing and debugging support while running in the context of WSL. To learn more, go to Developing in WSL or try the Working in WSL tutorial.

Insiders program

The Insiders program allows you to try out and automatically install new versions of the Python extension prior to release, including new features and fixes.

If you'd like to opt into the program, you can either open the Command Palette (⇧⌘P (Windows, Linux Ctrl+Shift+P)) and select Python: Switch to Insiders Daily/Weekly Channel or else you can open settings (⌘, (Windows, Linux Ctrl+,)) and look for Python: Insiders Channel to set the channel to 'daily' or 'weekly'.

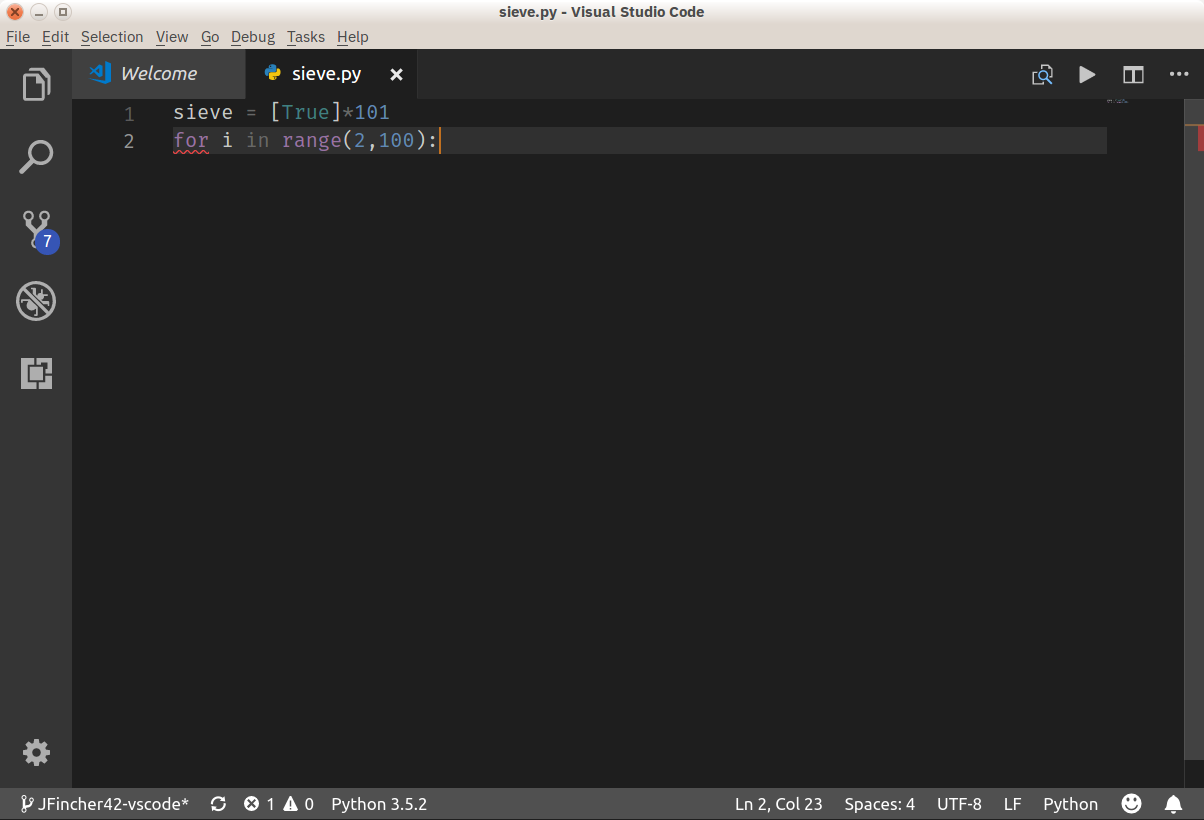

Run Python code

To experience Python, create a file (using the File Explorer) named hello.py and paste in the following code (assuming Python 3):
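The snippet itself is not reproduced in this copy. As a minimal stand-in, assuming any short script that prints a greeting will do (the message text is an assumption, not taken from the article), hello.py could contain:

```python
# hello.py: minimal stand-in script; the message text is an assumption.
msg = "Hello World"
print(msg)
```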

The Python extension then provides shortcuts to run Python code in the currently selected interpreter (Python: Select Interpreter in the Command Palette):

- In the text editor: right-click anywhere in the editor and select Run Python File in Terminal. If invoked on a selection, only that selection is run.

- In Explorer: right-click a Python file and select Run Python File in Terminal.

You can also use the Terminal: Create New Integrated Terminal command to create a terminal in which VS Code automatically activates the currently selected interpreter. See Environments below. The Python: Start REPL command activates a terminal with the currently selected interpreter and then runs the Python REPL.

For a more specific walkthrough on running code, see the tutorial.

Autocomplete and IntelliSense

The Python extension supports code completion and IntelliSense using the currently selected interpreter. IntelliSense is a general term for a number of features, including intelligent code completion (in-context method and variable suggestions) across all your files and for built-in and third-party modules.

IntelliSense quickly shows methods, class members, and documentation as you type, and you can trigger completions at any time with ⌃Space (Windows, Linux Ctrl+Space). You can also hover over identifiers for more information about them.

Tip: Check out the IntelliCode extension for VS Code (preview). IntelliCode provides a set of AI-assisted capabilities for IntelliSense in Python, such as inferring the most relevant auto-completions based on the current code context.

Linting

Linting analyzes your Python code for potential errors, making it easy to navigate to and correct different problems.

The Python extension can apply a number of different linters including Pylint, pycodestyle, Flake8, mypy, pydocstyle, prospector, and pylama. See Linting.

Debugging

No more print statement debugging! Set breakpoints, inspect data, and use the debug console as you run your program step by step. Debug a number of different types of Python applications, including multi-threaded, web, and remote applications.

For Python-specific details, including setting up your launch.json configuration and remote debugging, see Debugging. General VS Code debugging information is found in the debugging document. The Django and Flask tutorials also demonstrate debugging in the context of those web apps, including debugging Django page templates.

Environments

The Python extension automatically detects Python interpreters that are installed in standard locations. It also detects conda environments as well as virtual environments in the workspace folder. See Configuring Python environments. You can also use the python.pythonPath setting to point to an interpreter anywhere on your computer.

The current environment is shown on the left side of the VS Code Status Bar:

The Status Bar also indicates if no interpreter is selected:

The selected environment is used for IntelliSense, auto-completions, linting, formatting, and any other language-related feature other than debugging. It is also activated when you run Python in a terminal.

To change the current interpreter, which includes switching to conda or virtual environments, select the interpreter name on the Status Bar or use the Python: Select Interpreter command.

VS Code prompts you with a list of detected environments as well as any you've added manually to your user settings (see Configuring Python environments).

Installing packages

Packages are installed using the Terminal panel and commands like pip install (Windows) and pip3 install (macOS/Linux). VS Code installs that package into your project along with its dependencies. Examples are given in the Python tutorial as well as the Django and Flask tutorials.

Jupyter notebooks

If you open a Jupyter notebook file (.ipynb) in VS Code, you can use the Jupyter Notebook Editor to directly view, modify, and run code cells.

You can also convert and open the notebook as a Python code file. The notebook's cells are delimited in the Python file with #%% comments, and the Python extension shows Run Cell or Run All Cells CodeLens. Selecting either CodeLens starts the Jupyter server and runs the cell(s) in the Python interactive window:

Opening a notebook as a Python file allows you to use all of VS Code's debugging capabilities. You can then save the notebook file and open it again as a notebook in the Notebook Editor, Jupyter, or even upload it to a service like Azure Notebooks.
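As a rough illustration of the cell format described above, a Python file with two cells might look like the following sketch. The variable names and values are hypothetical; only the #%% delimiter comes from the text.

```python
#%%
# First cell: define some data (illustrative values, not from the article).
values = [1, 2, 3, 4]

#%%
# Second cell: uses the variable defined by the previous cell.
total = sum(values)
print(total)
```

Each #%% comment starts a new cell, so the two parts can be run one at a time while sharing state, much like cells in a notebook.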

Using either method, Notebook Editor or a Python file, you can also connect to a remote Jupyter server for running the code. For more information, see Jupyter support.

Testing

The Python extension supports testing with the unittest, pytest, and nose test frameworks.

To run tests, you enable one of the frameworks in settings. Each framework also has specific settings, such as arguments that identify paths and patterns for test discovery.

Once discovered, VS Code provides a variety of commands (on the Status Bar, the Command Palette, and elsewhere) to run and debug tests, including the ability to run individual test files and individual methods.
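To make the idea of individual test files and methods concrete, here is a minimal sketch using the standard unittest framework. The increment function and the test names are hypothetical and exist only for illustration.

```python
import unittest


def increment(x):
    """Hypothetical function under test; not part of the original article."""
    return x + 1


class TestIncrement(unittest.TestCase):
    # Each method whose name starts with "test" is collected as a separate test.
    def test_increment_positive(self):
        self.assertEqual(increment(3), 4)

    def test_increment_negative(self):
        self.assertEqual(increment(-1), 0)
```

Saved in a file whose name matches the discovery pattern (for unittest, test*.py by default), each of the two methods can be run or debugged on its own.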

Configuration

The Python extension provides a wide variety of settings for its various features. These are described on their relevant topics, such as Editing code, Linting, Debugging, and Testing. The complete list is found in the Settings reference.

Other popular Python extensions

The Microsoft Python extension provides all of the features described previously in this article. Additional Python language support can be added to VS Code by installing other popular Python extensions.

- Open the Extensions view (⇧⌘X (Windows, Linux Ctrl+Shift+X)).

- Filter the extension list by typing 'python'.

The extensions shown above are dynamically queried. Click on an extension tile above to read the description and reviews to decide which extension is best for you. See more in the Marketplace.

Next steps

- Python Hello World tutorial - Get started with Python in VS Code.

- Editing Python - Learn about auto-completion, formatting, and refactoring for Python.

- Basic Editing - Learn about the powerful VS Code editor.

- Code Navigation - Move quickly through your source code.

This tutorial demonstrates using Visual Studio Code and the Microsoft Python extension with common data science libraries to explore a basic data science scenario. Specifically, using passenger data from the Titanic, you will learn how to set up a data science environment, import and clean data, create a machine learning model for predicting survival on the Titanic, and evaluate the accuracy of the generated model.

Prerequisites

The following installations are required for the completion of the tutorial. If you do not have them already, install them prior to beginning.

The Python extension for VS Code from the Visual Studio Marketplace. For additional details on installing extensions, see Extension Marketplace. The Python extension is named Python and published by Microsoft.

Note: If you already have the full Anaconda distribution installed, you don't need to install Miniconda. Alternatively, if you'd prefer not to use Anaconda or Miniconda, you can create a Python virtual environment and install the packages needed for the tutorial using pip. If you go this route, you will need to install the following packages: pandas, jupyter, seaborn, scikit-learn, keras, and tensorflow.

Set up a data science environment

Visual Studio Code and the Python extension provide a great editor for data science scenarios. With native support for Jupyter notebooks combined with Anaconda, it's easy to get started. In this section, you will create a workspace for the tutorial, create an Anaconda environment with the data science modules needed for the tutorial, and create a Jupyter notebook that you'll use for creating a machine learning model.

Begin by creating an Anaconda environment for the data science tutorial. Open an Anaconda command prompt and run the following command to create an environment named myenv:

conda create -n myenv python=3.7 pandas jupyter seaborn scikit-learn keras tensorflow

For additional information about creating and managing Anaconda environments, see the Anaconda documentation.

Next, create a folder in a convenient location to serve as your VS Code workspace for the tutorial, and name it hello_ds.

Open the project folder in VS Code by running VS Code and using the File > Open Folder command.

Once VS Code launches, open the Command Palette (View > Command Palette or ⇧⌘P (Windows, Linux Ctrl+Shift+P)). Then select the Python: Select Interpreter command:

The Python: Select Interpreter command presents the list of available interpreters that VS Code was able to locate automatically (your list will vary from the one shown below; if you don't see the desired interpreter see Configuring Python environments). From the list, select the Anaconda environment you created, which should include the text 'myenv': conda.

With the environment and VS Code set up, the final step is to create the Jupyter notebook that will be used for the tutorial. Open the Command Palette (⇧⌘P (Windows, Linux Ctrl+Shift+P)) and select Jupyter: Create New Blank Jupyter Notebook.

Note: Alternatively, from the VS Code File Explorer, you can use the New File icon to create a Notebook file named hello.ipynb.

Use the Save icon on the main notebook toolbar to save the notebook with the filename hello.

After your file is created, you should see the open Jupyter notebook in the native notebook editor. For additional information about native Jupyter notebook support, see this section of the documentation.

Prepare the data

This tutorial uses the Titanic dataset available on OpenML.org, which is obtained from Vanderbilt University's Department of Biostatistics at http://biostat.mc.vanderbilt.edu/DataSets. The Titanic data provides information about the survival of passengers on the Titanic, as well as characteristics about the passengers such as age and ticket class. Using this data, the tutorial will establish a model for predicting whether a given passenger would have survived the sinking of the Titanic. This section shows how to load and manipulate data in your Jupyter notebook.

To begin, download the Titanic data from OpenML.org as a csv file named data.csv and save it to the hello_ds folder that you created in the previous section.

In VS Code, open the hello_ds folder and the Jupyter notebook (hello.ipynb), by going to File > Open Folder.

Within your Jupyter notebook, begin by importing the pandas and numpy libraries, two common libraries used for manipulating data, and loading the Titanic data into a pandas DataFrame. To do so, copy the below code into the first cell of the notebook. For additional guidance about working with Jupyter notebooks in VS Code, see the Working with Jupyter Notebooks documentation.
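The cell's code is not reproduced in this copy. As a rough sketch of the loading step, assuming the file is named data.csv as described above, the cell could look like this. To keep the example runnable on its own, a few illustrative rows in an in-memory buffer stand in for the real file; the column subset shown is an assumption based on the column names mentioned later in the tutorial.

```python
import io

import numpy as np  # imported here because later cells use np.nan
import pandas as pd

# In the tutorial, the data would be read from the downloaded file:
#     data = pd.read_csv("data.csv")
# Here a small in-memory sample (illustrative rows only) stands in for it.
sample_csv = io.StringIO(
    "pclass,survived,sex,age,sibsp,parch,fare\n"
    "1,1,female,29,0,0,211.3375\n"
    "3,0,male,?,1,0,7.25\n"
    "2,1,female,30,0,1,?\n"
)
data = pd.read_csv(sample_csv)
print(data.head())
```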

Now, run the cell using the Run cell icon or the Shift+Enter shortcut.

After the cell finishes running, you can view the data that was loaded using the variable explorer and data viewer. First click on the chart icon in the notebook's upper toolbar, then the data viewer icon to the right of the data variable. For additional information about the data set, refer to this document about how it was constructed.

You can then use the data viewer to view, sort, and filter the rows of data. After reviewing the data, it can then be helpful to graph some aspects of it to help visualize the relationships between the different variables.

Before the data can be graphed though, you need to make sure that there aren't any issues with it. If you look at the Titanic csv file, one thing you'll notice is that a question mark ('?') was used to designate cells where data wasn't available.

While Pandas can read this value into a DataFrame, the result for a column like Age is that its data type will be set to Object instead of a numeric data type, which is problematic for graphing.

This problem can be corrected by replacing the question mark with a missing value that pandas is able to understand. Add the following code to the next cell in your notebook to replace the question marks in the age and fare columns with the numpy NaN value. Notice that we also need to update the column's data type after replacing the values.
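A minimal sketch of this cleanup step, assuming the DataFrame is called data and has age and fare columns as described above (the rows below are illustrative, not taken from the dataset):

```python
import numpy as np
import pandas as pd

# Illustrative rows only; in the tutorial, `data` is the DataFrame loaded from data.csv.
data = pd.DataFrame({
    "age": ["29", "?", "30"],
    "fare": ["211.3375", "7.25", "?"],
})

# Replace the '?' placeholder with NaN, which pandas treats as a missing value.
data = data.replace("?", np.nan)

# The columns still hold strings at this point, so convert them to a numeric type.
data = data.astype({"age": np.float64, "fare": np.float64})

print(data.dtypes)
```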

Tip: To add a new cell you can use the insert cell icon that's in the bottom left corner of an existing cell. Alternatively, you can press Esc to enter command mode, followed by the B key.

Note: If you ever need to see the data type that has been used for a column, you can use the DataFrame dtypes attribute.

Now that the data is in good shape, you can use seaborn and matplotlib to view how certain columns of the dataset relate to survivability. Add the following code to the next cell in your notebook and run it to see the generated plots.
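The plotting cell is not reproduced in this copy. The sketch below shows one way such plots could be drawn with seaborn, assuming a cleaned DataFrame named data. The choice of plots and columns is an assumption, and random synthetic values stand in for the real passengers so that the example runs on its own.

```python
import matplotlib
matplotlib.use("Agg")  # non-interactive backend for standalone runs; omit this line in a notebook
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd
import seaborn as sns

# Synthetic stand-in for the cleaned Titanic DataFrame (random values, not real data).
rng = np.random.default_rng(0)
n = 80
data = pd.DataFrame({
    "survived": rng.integers(0, 2, n),
    "sex": rng.choice(["male", "female"], n),
    "age": rng.uniform(1, 80, n),
    "pclass": rng.integers(1, 4, n),
})

# Two side-by-side plots relating input columns to survival.
fig, axs = plt.subplots(ncols=2, figsize=(12, 5))
sns.violinplot(x="survived", y="age", hue="sex", data=data, ax=axs[0])
sns.pointplot(x="pclass", y="survived", hue="sex", data=data, ax=axs[1])
```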

Note: To better view details on the graphs, you can open them in plot viewer by hovering over the upper left corner of the graph and clicking the button that appears.

These graphs are helpful in seeing some of the relationships between survival and the input variables of the data, but it's also possible to use pandas to calculate correlations. To do so, all the variables used need to be numeric for the correlation calculation and currently gender is stored as a string. To convert those string values to integers, add and run the following code.
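One way to do the conversion is sketched below. The column name sex follows the input list given later in the tutorial; which gender maps to 1 and which to 0 is an assumption, and the rows are illustrative.

```python
import pandas as pd

# Illustrative rows only.
data = pd.DataFrame({"sex": ["male", "female", "female"], "survived": [0, 1, 1]})

# Encode the two string categories as integers so the column can be used
# in numeric calculations such as correlations.
data["sex"] = data["sex"].map({"male": 1, "female": 0})

print(data)
```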

Now, you can analyze the correlation between all the input variables to identify the features that would be the best inputs to a machine learning model. The closer the absolute value of a correlation is to 1, the stronger the relationship between that variable and the result. Use the following code to correlate the relationship between all variables and survival.
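As a sketch of the correlation step, assuming all columns are already numeric, the absolute correlation of every column with survived can be read from the DataFrame's correlation matrix. The data below is synthetic and deliberately built so that sex is related to survival while age is not.

```python
import numpy as np
import pandas as pd

# Synthetic stand-in data: survival is made to depend on sex, age is pure noise.
rng = np.random.default_rng(0)
n = 200
sex = rng.integers(0, 2, n)
survived = np.where(rng.random(n) < 0.8, 1 - sex, sex)
data = pd.DataFrame({"sex": sex, "age": rng.uniform(1, 80, n), "survived": survived})

# Pairwise correlations, taken as absolute values, restricted to the survived column.
corr = data.corr().abs()[["survived"]]
print(corr)
```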

Looking at the correlation results, you'll notice that some variables like gender have a fairly high correlation to survival, while others like relatives (sibsp = siblings or spouse, parch = parents or children) seem to have little correlation.

Let's hypothesize that sibsp and parch are related in how they affect survivability, and group them into a new column called 'relatives' to see whether the combination of them has a higher correlation to survivability. To do this, you will check if for a given passenger, the number of sibsp and parch is greater than 0 and, if so, you can then say that they had a relative on board.

Use the following code to create a new variable and column in the dataset called relatives and check the correlation again.

You'll notice that in fact when looked at from the standpoint of whether a person had relatives, versus how many relatives, there is a higher correlation with survival. With this information in hand, you can now drop from the dataset the low value sibsp and parch columns, as well as any rows that had NaN values, to end up with a dataset that can be used for training a model.
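The two steps described above, building the relatives column and then trimming the dataset, could be sketched as follows. The rows are illustrative, and the list of retained columns is taken from the five inputs named later in the tutorial plus survived.

```python
import numpy as np
import pandas as pd

# Illustrative rows only; one row has a missing age.
data = pd.DataFrame({
    "sex": [1, 0, 0, 1],
    "pclass": [3, 1, 2, 3],
    "age": [22.0, 38.0, np.nan, 35.0],
    "sibsp": [1, 1, 0, 0],
    "parch": [0, 2, 0, 0],
    "fare": [7.25, 71.28, 13.0, 8.05],
    "survived": [0, 1, 1, 0],
})

# 1 if the passenger had at least one sibling, spouse, parent, or child on board, else 0.
data["relatives"] = ((data["sibsp"] + data["parch"]) > 0).astype(int)
print(data.corr().abs()[["survived"]])

# Keep only the columns used for training and drop rows with missing values.
data = data[["sex", "pclass", "age", "relatives", "fare", "survived"]].dropna()
```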

Note: Although age had a low direct correlation, it was kept because it seems reasonable that it might still have correlation in conjunction with other inputs.

Train and evaluate a model

With the dataset ready, you can now begin creating a model. For this section you'll use the scikit-learn library (as it offers some useful helper functions) to do pre-processing of the dataset, train a classification model to determine survivability on the Titanic, and then use that model with test data to determine its accuracy.

A common first step to training a model is to divide up the dataset into training and validation data. This allows you to use a portion of the data to train the model and a portion of the data to test the model. If you used all your data to train the model, you wouldn't have a way to estimate how well it would actually perform against data the model has not yet seen. A benefit of the scikit-learn library is that it provides a method specifically for splitting a dataset into training and test data.

Add and run a cell with the following code to the notebook to split up the data.
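A minimal sketch of the split, using scikit-learn's train_test_split. The 80/20 ratio, the random seed, and the synthetic arrays are assumptions made so the example runs on its own.

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Synthetic stand-ins: 100 passengers with 5 input features and a 0/1 label.
rng = np.random.default_rng(0)
X = rng.random((100, 5))
y = rng.integers(0, 2, 100)

# Hold back 20% of the rows for testing; random_state makes the split repeatable.
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
print(x_train.shape, x_test.shape)
```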

Next, you'll normalize the inputs such that all features are treated equally. For example, within the dataset the values for age range from ~0-100, while gender is only a 1 or 0. By normalizing all the variables, you can ensure that the ranges of values are all the same. Use the following code in a new code cell to scale the input values.
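One common way to do this in scikit-learn is StandardScaler, which rescales each feature to zero mean and unit variance. Whether the tutorial uses this particular scaler is an assumption here, and the small arrays are illustrative.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Illustrative values: an age-like column and a 0/1 column on very different scales.
x_train = np.array([[22.0, 1.0], [38.0, 0.0], [54.0, 1.0], [30.0, 0.0]])
x_test = np.array([[40.0, 1.0]])

sc = StandardScaler()
X_train = sc.fit_transform(x_train)  # learn mean and standard deviation from the training data only
X_test = sc.transform(x_test)        # reuse those statistics for the test data

print(X_train)
```

Fitting the scaler on the training data alone keeps information about the test set from leaking into the model.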

There are a number of different machine learning algorithms that you could choose from to model the data and scikit-learn provides support for a number of them, as well as a chart to help select the one that's right for your scenario. For now, use the Naïve Bayes algorithm, a common algorithm for classification problems. Add a cell with the following code to create and train the algorithm.

With a trained model, you can now try it against the test data set that was held back from training. Add and run the following code to predict the outcome of the test data and calculate the accuracy of the model.
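The training and evaluation steps can be sketched end to end as below. GaussianNB is used as the Naïve Bayes variant, which is an assumption, and the data is synthetic, so the accuracy printed here says nothing about the Titanic results.

```python
import numpy as np
from sklearn import metrics
from sklearn.model_selection import train_test_split
from sklearn.naive_bayes import GaussianNB
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in data: the label depends on the first feature plus noise.
rng = np.random.default_rng(0)
n = 400
X = rng.normal(size=(n, 5))
y = (X[:, 0] + 0.5 * rng.normal(size=n) > 0).astype(int)

# Split, then scale using statistics from the training portion only.
x_train, x_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)
sc = StandardScaler()
X_train = sc.fit_transform(x_train)
X_test = sc.transform(x_test)

# Create and train the classifier.
model = GaussianNB()
model.fit(X_train, y_train)

# Predict on the held-back data and compare against the known labels.
predict_test = model.predict(X_test)
accuracy = metrics.accuracy_score(y_test, predict_test)
print(accuracy)
```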

Looking at the result of the test data, you'll see that the trained algorithm had a ~75% success rate at estimating survival.

(Optional) Use a neural network to increase accuracy

A neural network is a model that uses weights and activation functions, modeling aspects of human neurons, to determine an outcome based on provided inputs. Unlike the machine learning algorithm you looked at previously, neural networks are a form of deep learning wherein you don't need to know an ideal algorithm for your problem set ahead of time. It can be used for many different scenarios and classification is one of them. For this section, you'll use the Keras library with TensorFlow to construct the neural network, and explore how it handles the Titanic dataset.

The first step is to import the required libraries and to create the model. In this case, you'll use a Sequential neural network, which is a layered neural network wherein there are multiple layers that feed into each other in sequence.

After defining the model, the next step is to add the layers of the neural network. For now, let's keep things simple and just use three layers. Add the following code to create the layers of the neural network.

- The first layer will be set to have a dimension of 5, since you have 5 inputs: sex, pclass, age, relatives, and fare.

- The last layer must output 1, since you want a 1-dimensional output indicating whether a passenger would survive.

- The middle layer was kept at 5 for simplicity, although that value could have been different.

The rectified linear unit (relu) activation function is used as a good general activation function for the first two layers, while the sigmoid activation function is required for the final layer as the output you want (of whether a passenger survives or not) needs to be scaled in the range of 0-1 (the probability of a passenger surviving).
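To show what these three layers compute, here is a plain NumPy sketch of the forward pass through a 5-5-1 network with relu and sigmoid activations. It is not the Keras code the tutorial refers to; the weights are random and untrained, and all names are hypothetical.

```python
import numpy as np


def relu(z):
    # Rectified linear unit: negative values become 0, positive values pass through.
    return np.maximum(0.0, z)


def sigmoid(z):
    # Squashes any real number into the range 0-1, so it can be read as a probability.
    return 1.0 / (1.0 + np.exp(-z))


rng = np.random.default_rng(0)
# Random, untrained weights: 5 inputs -> 5 units -> 5 units -> 1 output.
W1, b1 = rng.normal(size=(5, 5)), np.zeros(5)
W2, b2 = rng.normal(size=(5, 5)), np.zeros(5)
W3, b3 = rng.normal(size=(5, 1)), np.zeros(1)


def forward(x):
    h1 = relu(x @ W1 + b1)            # first layer
    h2 = relu(h1 @ W2 + b2)           # middle layer
    return sigmoid(h2 @ W3 + b3)      # output layer: one value per passenger


batch = rng.normal(size=(4, 5))  # four made-up passengers with 5 scaled inputs each
probs = forward(batch)
print(probs.shape)
```

Training adjusts the weight matrices so that the outputs move toward the known survival labels; the layer structure itself stays as shown.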

You can also look at the summary of the model you built with this line of code:

Once the model is created, it needs to be compiled. As part of this, you need to define what type of optimizer will be used, how loss will be calculated, and what metric should be optimized for. Add the following code to build and train the model. You'll notice that after training the accuracy is ~80%.

Note: This step may take anywhere from a few seconds to a few minutes to run depending on your machine.
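The build and train cells are not reproduced in this copy. The following is a sketch of how the described model could be defined, compiled, and trained with Keras, assuming TensorFlow is installed. The optimizer, loss, batch size, and epoch count are assumptions, and the data is synthetic, so the accuracy it reports is unrelated to the ~80% figure above.

```python
import numpy as np
from tensorflow import keras

# Synthetic stand-in data: 5 scaled inputs, label depends on the first one plus noise.
rng = np.random.default_rng(0)
X_train = rng.normal(size=(200, 5))
y_train = (X_train[:, 0] + 0.5 * rng.normal(size=200) > 0).astype(int)

# Three Dense layers as described: 5 -> 5 -> 1, relu then sigmoid.
model = keras.Sequential([
    keras.Input(shape=(5,)),
    keras.layers.Dense(5, activation="relu"),
    keras.layers.Dense(5, activation="relu"),
    keras.layers.Dense(1, activation="sigmoid"),
])
model.summary()

# Binary cross-entropy suits a single 0-1 output; accuracy is tracked as the metric.
model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
model.fit(X_train, y_train, batch_size=32, epochs=5, verbose=0)

loss, acc = model.evaluate(X_train, y_train, verbose=0)
probs = model.predict(X_train, verbose=0)
print(acc)
```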

With the model built and trained, it's now time to see how it performs against the test data.

Similar to the training, you'll notice that you were able to get close to 80% accuracy in predicting survival of passengers. This result was better than the 75% accuracy from the Naive Bayes Classifier tried previously.

Next steps

Now that you're familiar with the basics of performing machine learning within Visual Studio Code, here are some other Microsoft resources and tutorials to check out.

- Learn more about working with Jupyter Notebooks in Visual Studio Code (video).

- Get started with Azure Machine Learning for VS Code to deploy and optimize your model using the power of Azure.

- Find additional data to explore on Azure Open Data Sets.